Computerised or Face-to-face Oral Testing? A Tutor’s Critical Reflection of Japanese Beginners’ Test Experience and Learner Anxiety

Julian Chen is a senior lecturer in the School of Education at Curtin University where he teaches the postgraduate programmes in Applied Linguistics and TESOL. His research interests include technology-enhanced language learning, task-based language teaching and action research. His work has appeared in flagship journals such as Computers & Education, Computer Assisted Language Learning, Language Teaching Research, TESOL Quarterly, and The Modern Language Journal. Email: Julian.Chen@curtin.edu.au

Hiroshi Hasegawa is the Japanese Course Coordinator in the School of Education at Curtin University in Western Australia. He has extensive teaching experience from primary to tertiary level. His main research interests include pedagogies of foreign language education, ethics in education and ICT-led educational reform. Email: H.Hasegawa@curtin.edu.au

Teagan Collopy is a Ph.D. candidate in the field of Japanese Linguistics (Education) in the School of Education at Curtin University. She holds a Bachelor of Arts in Japanese Language and Photography and Illustration Design, as well as a BA Honours in Japanese Linguistics (Education). Her current doctoral research focuses on Japanese youth language. Email: teagan.collopy@graduate.curtin.edu.au

Abstract

Despite its pedagogical benefits, such as time-efficiency, uniformity, prompt feedback and reporting, computerised oral testing (COT) in languages other than English remains underutilised in Japanese teaching and learning at Australian universities. Following a pilot study on the effectiveness of COT delivery, the Japanese tutor’s critical reflection was investigated further through a follow-up survey and a semi-structured interview. This reflective article reports on the tutor’s perspective as a COT assessor: the impact of COT on students’ oral test performance, including learner anxiety, and its administrative benefits, compared with her past experience of the traditional face-to-face (F2F) oral interview test. The article concludes with best practices and lessons learned from this COT approach that can be applied to other foreign language oral assessments using COT at the tertiary level.

Background

Traditional F2F oral testing usually requires the presence of an oral interviewer who is expected to carry out multiple tasks during testing (Larson, 2000): not only testing what has been taught, but also monitoring how students demonstrate their proficiency across different oral components of the target language, such as pronunciation and vocabulary (Amengual-Pizarro & García-Laborda, 2017; Norris, 2001; Zhou, 2015). Bachman (1990) also notes that students are often anxious about speaking to an assessor face to face, and this anxiety can negatively affect their speaking performance (Kessler, 2010). In comparison, computer-aided testing has drawn increasing attention in foreign language education because of its time-efficiency, uniformity and prompt feedback. It not only brings administrative benefits to academics but also helps with socioaffective factors such as learner anxiety and motivation (Zhou, 2015).

Despite these benefits, computerised oral testing (COT) requires considerable financial support and staff training. As a result, many stakeholders in current languages other than English (LOTE) programs fall back on traditional F2F oral testing (Douglas & Hegelheimer, 2007). To address these concerns, we conducted a pilot study at our university on the effectiveness of COT delivery in the first-year Japanese program. It investigated the impact of digital testing on the perspectives of Japanese beginners and other stakeholders (tutors and the course coordinator). The goal was to build a research portfolio, improve the program through piloting, and identify best practices transferable to the wider community: an oral proficiency model that other tertiary LOTE programs could adopt.

Accordingly, this article reports the tutor’s perspective on the COT trial, compared with her experience of the traditional F2F oral interview. It first outlines how the computerised program was developed, piloted and delivered. It then presents the tutor’s evaluation of the two test modes and their impact on students’ oral performance and learner anxiety, and concludes with the pedagogical implications (strengths and caveats) of this implementation.

Implementation

To launch the first COT system for oral assessment, we (the researchers) worked with the tutor to select questions suitable for beginners. The question content mirrored the lecture topics covered throughout the course (e.g., shopping, family, weather). Questions were of three types: 1) factual (e.g., dates, weather), 2) opinion (e.g., hobbies), and 3) description (e.g., a pictured person’s features). Once the questions had been revised, the tutor and a software programmer began developing the COT program, which randomly assigned video-recorded questions (mimicking the F2F format) to each student. Former students were invited to comment on their COT experience, and their feedback was used to improve the program (e.g., content, navigation, interface and user-friendliness).
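The randomised question assignment described above can be illustrated with a minimal Python sketch. It is not the pilot's actual program, whose internals are not described here. It assumes a hypothetical question bank in which each question is tagged with one of the three types and linked to a pre-recorded video, and it assumes every student draws the same number of questions per type. The `Question` class, the `assign_questions` function, its parameters and the file names are all hypothetical.

```python
import random
from dataclasses import dataclass

# The three question types used in the pilot (factual, opinion, description).
QUESTION_TYPES = ("factual", "opinion", "description")


@dataclass(frozen=True)
class Question:
    qtype: str       # one of QUESTION_TYPES
    video_file: str  # hypothetical path to a pre-recorded question video


def assign_questions(bank, student_id, per_type=2, seed="cot-pilot"):
    """Return a randomised list of questions for one student.

    Draws `per_type` distinct questions of each type from `bank`, then
    shuffles their order. Seeding the generator with the student ID makes
    each draw reproducible, so a marker can later recover exactly which
    videos a student saw. Raises ValueError if a type has fewer than
    `per_type` questions in the bank.
    """
    rng = random.Random(f"{seed}:{student_id}")
    selected = []
    for qtype in QUESTION_TYPES:
        pool = [q for q in bank if q.qtype == qtype]
        selected.extend(rng.sample(pool, per_type))
    rng.shuffle(selected)  # randomise presentation order as well as selection
    return selected


if __name__ == "__main__":
    # Hypothetical bank: four recorded questions per type.
    bank = [Question(t, f"{t}_{i}.mp4") for t in QUESTION_TYPES for i in range(4)]
    for q in assign_questions(bank, "student-001"):
        print(q.qtype, q.video_file)
```

Because each student still receives the same number of questions of each type, the draw varies the individual items without changing the overall structure of the test.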

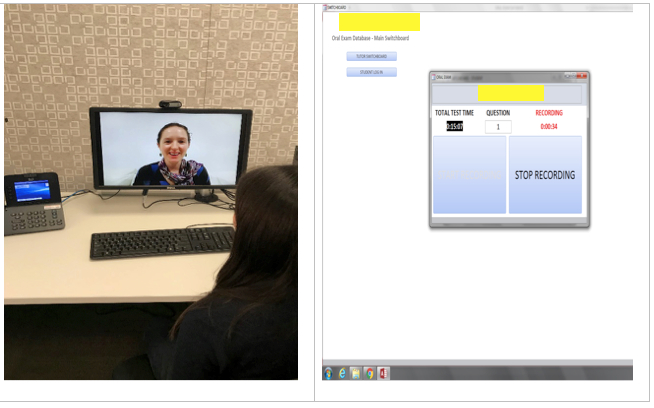

Students were randomly assigned to either the comparison group (traditional F2F format) or the treatment group (COT program). They were informed that both testing modes were comparable, with the same questions, the same response time (30 seconds) and the same total test duration (16 minutes). Oral performances in both groups were audio-recorded for marking and research purposes. Figure 1 shows a student undergoing the COT, followed by the recording prompt interface.

Tutor’s reflection and evaluation

Although she had five years of experience teaching beginner-level Japanese, the tutor had never used COT for Japanese oral assessment before trialling the program in this study; her default format had been predominantly F2F. To gauge the usefulness of the COT, we invited the tutor to complete a survey and to share her critical reflection, in a semi-structured interview, on the test outcomes and the extent to which the two modes affected students’ oral performance. Her responses indicated that both modes were perceived as equally supportive of students’ testing experience. For example, the initial recording for self-practice (COT) and the warm-up questions (F2F) helped ease students into the assessment, while visual stimuli (pictures) aided their comprehension of the questions.

Regarding the usefulness of the two testing modes, the tutor found that the COT was delivered consistently and was easy to navigate, and that its multimedia elements (i.e., audio and visual) enhanced students’ overall test experience. She therefore recommended it for future oral testing. Interestingly, her attitude towards using F2F oral testing in future remained neutral, as it was difficult for her to maintain consistent delivery during an intensive testing period. The COT, by contrast, delivered all questions consistently without requiring an assessor’s presence, regardless of the test taker. She echoed this in the interview: “if you are conducting oral tests with a large number of students and the oral test contains a number of questions…very difficult to maintain consistently [intonation emphasis] over running exams face-to-face, over a long period of time…”. Because consistency is built into the system, “every student hears questions in exactly the same way, with exactly the same emphasis on particular words. Meaning that no student gets any advantage over any other student.” This consistency also extends to marking, since evaluating test takers’ oral performance consistently, reliably and cost-effectively is the ultimate goal of language tutors. She also described how the features of the computerised database benefited the marking process:

it's a huge time saver…you can break down those questions and then make comparisons between the answers given by students to the individual questions. Which will result, I think, in less bias in the marking process because instead of assessing students overall on their test, you can assess them against each question, how they perform in each question and then produce a completely unbiased mark at the end of that marking process for each student.

This evaluation indicates how COT can tackle the inconsistency typically encountered in traditional F2F testing by making the test format, delivery and marking consistent and fair for all students.

Learner anxiety, a critical aspect of this project, was also examined. In the survey, the tutor rated the reduction in students’ performance anxiety higher for COT than for F2F (4 vs. 2 on a 5-point Likert scale). Although the F2F format allowed the assessor to use body language to prompt students’ oral responses, it also triggered learner anxiety:

…in the face-to-face format, they're looking for visual cues and body language from me to […] tell them how well they're doing or not … they also feel conscious that they are being watched…So they’re sort of uncomfortable speaking for too long…the challenge for the teacher to remain completely unbiased, not giving encouragement or discouragement to any particular students on any particular question…is very difficult to do when you're face-to-face with someone.

Compared with F2F testing, COT offers more flexibility by giving test takers self-paced control, thereby lowering learner anxiety. The tutor elaborated that students taking the computerised version “…felt more comfortable. They didn't feel pressured to answer immediately. So they had that ability to respond when they were ready rather than sort of feeling on the spot; ‘Someone's looking at me. I need to answer straight away.’” This contrast between the testing modes with respect to learner anxiety points to the pedagogical and affective benefits of administering COT to Japanese beginners.

Final remarks

High-stakes F2F oral testing usually produces negative washback on stakeholders because of its time-consuming administration and the test anxiety it inevitably provokes. COT offers a cost-effective, consistent and less threatening alternative. It has the potential to streamline test delivery and reduce learner anxiety while providing reliable marking that helps ensure unbiased assessment. It is vital, however, to give students taking the computerised mode sufficient orientation so that they can navigate it easily and avoid technical difficulties. The tutor’s reflective evaluation of this practice allows us to view this test alternative in a new pedagogical light.

Acknowledgement

The authors would like to express their gratitude to the tutor, E. Miller, for her professional assistance and involvement in this research project.

References

Amengual-Pizarro, M., & García-Laborda, J. (2017). Analysing test-takers’ views on a computer-based speaking test. Profile: Issues in Teachers’ Professional Development, 19, 23-38. https://doi.org/10.15446/profile.v19n_sup1.68447

Bachman, L. F. (1990). Fundamental considerations in language testing. Oxford University Press.

Douglas, D., & Hegelheimer, V. (2007). Assessing language using computer technology. Annual Review of Applied Linguistics, 27, 115-132. https://doi.org/10.1017/S0267190508070062

Kessler, G. (2010). Fluency and anxiety in self-access speaking tasks: The influence of environment. Computer Assisted Language Learning, 23(4), 361-375. https://doi.org/10.1080/09588221.2010.512551

Larson, J. W. (2000). Testing oral language skills via the computer. CALICO Journal, 18(1), 53-66. https://doi.org/10.1558/cj.v18i1.53-66

Norris, J. M. (2001). Concerns with computerized adaptive oral proficiency assessment. Language Learning & Technology, 5(2), 99-105.

Zhou, Y. (2015). Computer-delivered or face-to-face: Effects of delivery-mode on the testing of second language speaking. Language Testing in Asia, 5(2), 1-16. https://doi.org/10.1186/s40468-014-0012-y
