Triple-A Writing Analysis: Analyzing the Message, the Complexity of Language, and Fluency of Expression

Miriam writes to us from British Columbia, Canada, where she has taught Kindergarten through Grade Seven in English and French since 1984 and has worked as a Special Education teacher for 15 years. She wrote this article in partial fulfilment of the requirements of her scholarly portfolio for a PhD in Education. Email: miriamsemeniuk@gmail.com

Abstract

Triple-A writing analysis examines three constructs in academic writing: the message, the complexity of the language used to convey that message, and the fluency of expression across text genres. Repeated analyses of student writing apply empirically derived, binary-choice, boundary-definition ratings (Turner & Upshur, 1996) within assessment-decision pathways, making this a highly individualized approach to formative assessment. Analyzing each text several times can support individual students at their point of need as they acquire knowledge, refine the language they use to express their ideas, and work toward high-level writing.

Introduction

This article presents a three-stage assessment of student writing that extends the empirically derived, binary-choice, boundary-definition (EBB) rating scale (Turner & Upshur, 1996; Baker & Newman, 2023) for use in formative classroom assessment. EBB scales were originally developed as screening tools for identifying the top performers to be admitted to educational programs. In the current application, three analyses (Triple-A) of student writing are built into assessment-decision pathways, so that instructional decisions are based on each learner’s needs as measured against the assessment criteria for each piece of writing.

To prepare a rating scale, raters use a series of critical questions to sort written texts into two piles, higher and lower achievement, according to characteristics of the performance that differentiate high-level from low-level writing (e.g., Was an opinion stated? Yes/No. Were reasons given to support the opinion? Yes/No.). Each pile is then examined with a further critical question that distinguishes between the samples within it; in a screening context, this identifies the highest achievers and so determines which candidates are accepted into the program. The results can also be used to assign grades: writing samples that meet all or nearly all of the criteria receive an A+ or A, and those in need of refinement receive lower grades.
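The two-pile procedure can be pictured as a small binary decision tree. The Python sketch below is a minimal illustration under stated assumptions. The root question and the follow-up question in the upper pile reuse the opinion-writing examples above. The `on_topic` question for the lower pile, the four score bands, the band-to-grade mapping, and the student names are all hypothetical choices made for illustration. None of them comes from Turner and Upshur’s (1996) published scales.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """Yes/No answers a rater records for one writing sample."""
    opinion_stated: bool   # example question from the text
    reasons_given: bool    # example question from the text
    on_topic: bool         # hypothetical question, used only in the lower pile


def ebb_band(s: Sample) -> int:
    """Return a score band from 1 (lowest) to 4 (highest) via two binary choices."""
    if s.opinion_stated:                    # root question: upper or lower pile
        return 4 if s.reasons_given else 3  # second question within the upper pile
    return 2 if s.on_topic else 1           # second question within the lower pile


# Hypothetical mapping from score band to letter grade.
GRADES = {4: "A", 3: "B", 2: "C", 1: "D"}

# Hypothetical rater judgements for three students.
samples = {
    "Ana": Sample(opinion_stated=True, reasons_given=True, on_topic=True),
    "Ben": Sample(opinion_stated=True, reasons_given=False, on_topic=True),
    "Cai": Sample(opinion_stated=False, reasons_given=False, on_topic=True),
}

for name, s in samples.items():
    band = ebb_band(s)
    print(name, band, GRADES[band])
```

Running it prints `Ana 4 A`, `Ben 3 B`, and `Cai 2 C`. Each sample receives exactly two yes/no judgements, whichever pile it lands in. This is what keeps EBB rating fast compared with scoring every criterion for every text.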

In Triple-A analyses, the binary model is applied formatively to each student’s work, with three key questions in each of three analyses. Each text is analyzed three times, for three purposes: the message, the complexity of language, and fluency or coherence. Rather than using the ratings for admission to programs, as originally intended, the key questions (KQ) guide brief one-on-one conferences with students. Ongoing descriptive feedback is given: corrective feedback that is meaningful and clear, supports improved learning, and helps set specific short- and long-term learning objectives.

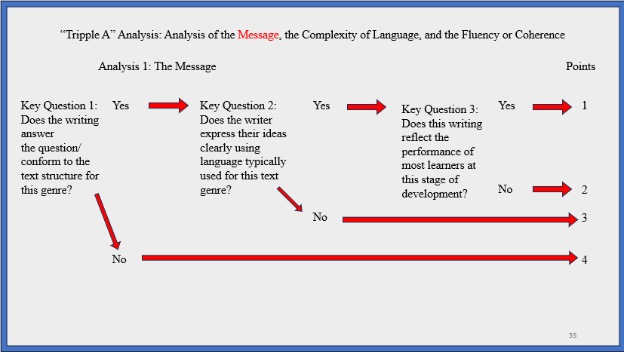

To illustrate, the first set of critical questions, focusing on the message, is displayed in an assessment-decision pathway (see Figure 1):

Figure 1

In analyzing the message, KQ 1 asks: Does the writing answer the question or conform to the text structure? If yes, the student’s writing is analyzed a second time with KQ 2: Does the writer express their ideas clearly in the language used? Advanced students will typically have checked their work with a word processor or a bilingual word checker. Their work is then evaluated against KQ 3: Does this writing reflect the performance of most learners at this stage of development? For most learners, the answer will be yes, since their writing will be on par with that of their peers.
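Read as a procedure, the message pathway is a sequence of yes/no gates. A text moves on to the next key question only when the current answer is yes, and the first "no" marks where instruction should focus. The Python sketch below assumes exactly that reading. The question wording is taken from the text, but the function name `walk_pathway` and the returned labels are hypothetical, and the actual outcomes drawn in Figures 1 and 2 may differ.

```python
from typing import List

# Key questions for the message analysis, as worded in the text above.
MESSAGE_KQS = [
    "Does the writing answer the question or conform to the text structure?",
    "Does the writer express their ideas clearly in the language used?",
    "Does this writing reflect the performance of most learners at this stage of development?",
]


def walk_pathway(answers: List[bool]) -> str:
    """Ask the key questions in order and stop at the first 'no'.

    Returns a hypothetical label naming the KQ that the one-on-one
    conference should address, or 'on par' if every answer is yes.
    """
    if len(answers) != len(MESSAGE_KQS):
        raise ValueError("expected one yes/no answer per key question")
    for number, (question, met) in enumerate(zip(MESSAGE_KQS, answers), start=1):
        if not met:
            return f"conference on KQ {number}: {question}"
    return "on par"


print(walk_pathway([True, True, True]))                  # on par
print(walk_pathway([True, False, True]).split(":")[0])   # conference on KQ 2
```

Because the walk stops at the first "no", answers to later questions are ignored. This mirrors the point that work not meeting KQ 1 goes to intervention rather than on to KQ 2.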

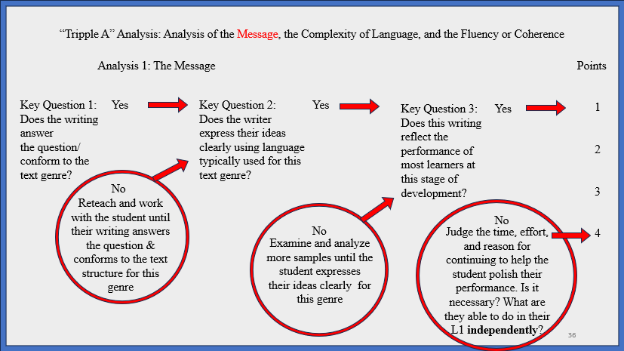

Students whose work does not meet the criteria at KQ 1, however, require further teaching, modeling, and practice in writing. The instructional decisions for this intervention are set out in the flow chart in Figure 2.

Figure 2

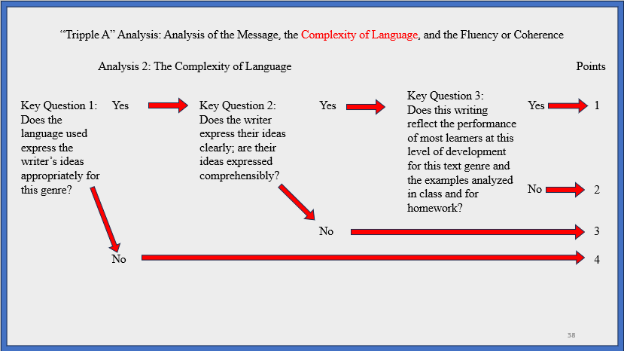

The second analysis examines the complexity of language. Here the teacher first identifies the genre being taught (an argument, poetry, recipes or procedures, a historical timeline, etc.), so that attention can be given to the specific language that genre requires. The analysis follows the same sequence as before, using three key questions to examine the complexity of the language used. KQ 1 assesses whether the student understands the genre: Does the language used express the writer’s ideas appropriately for this genre? (Yes/No). Once the student’s understanding of the text type is established, KQ 2 assesses clarity: Does the writer express their ideas clearly; are their ideas comprehensible? (Yes/No). KQ 3 then assesses mastery of the genre relative to other learners: Does this writing reflect the performance of most learners at this level of development, for this genre and for the examples analyzed in class and for homework? (Yes/No). The aim is for learners to compose strong pieces of writing and powerful responses while attending to elements of language (grammar, vocabulary range and control).

The second set of critical questions, focusing on the complexity of language, is displayed in an assessment-decision pathway (see Figure 3):

Figure 3

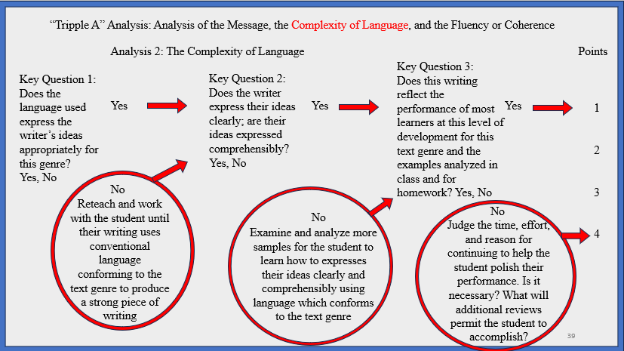

Some students need additional modeling of complex language structures and help in editing their work. These students receive an intervention in individual assessment conferences, following the instructional decisions shown in the flow chart (see Figure 4):

Figure 4

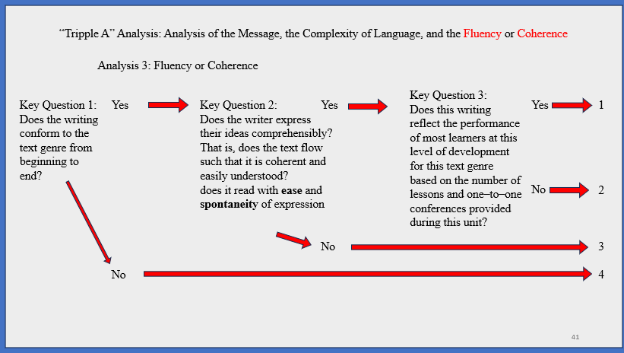

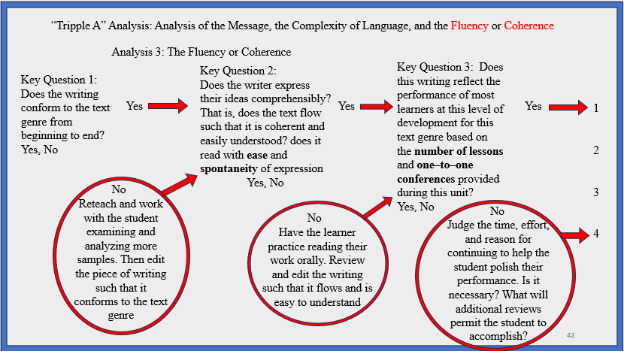

The third analysis addresses fluency or coherence. It asks whether the writing reads smoothly from beginning to end and whether it is comprehensible. It also asks whether the writing reflects the intended level of development, given the number of lessons taught and the feedback provided. A sample assessment-decision pathway for fluency of expression is shown below. Because the writing must conform to the type of text studied, KQ 1 asks: Does the writing conform to the text genre from beginning to end? KQ 2 then analyzes how the entire piece flows by asking: Does the writer express their ideas comprehensibly? Does the writing read with ease and spontaneity of expression? That is, does the text flow so that it is coherent and easily understood?

Finally, a student’s overall writing skills are assessed in comparison with the writing of others in the class. They are also assessed by the extent to which feedback given in assessment conversations (i.e., individual conferences) is integrated into successive revisions. As presented in Figure 5, KQ 3 asks: Does this writing reflect the performance of most learners at this level of development for this text genre, based on the number of lessons and one-on-one conferences provided during this unit?

Figure 5

The assessment-decision pathway shown below completes the three analyses (see Figure 6). Whether to continue supporting low-achieving students is decided according to their performance and their responses to the interventions provided, as they refine increasingly complex text structures.
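To make this final decision concrete, the Python sketch below records the yes/no answers to KQ 1 to KQ 3 in each of the three analyses for each revision. For the latest revision, it reports which key question still needs attention in each analysis. It also compares the latest revision with the one before it to judge whether the student is responding to the intervention. The data layout, the helper names, and the "responding" rule (more key questions met than in the previous revision) are hypothetical assumptions for illustration. The article does not specify a numerical rule.

```python
from typing import Dict, List, Optional

ANALYSES = ("message", "complexity", "fluency")

# Hypothetical record of one revision: yes/no answers to KQ 1-3 per analysis.
Revision = Dict[str, List[bool]]


def first_unmet(answers: List[bool]) -> Optional[int]:
    """Return the number of the first key question answered 'no', or None."""
    for number, met in enumerate(answers, start=1):
        if not met:
            return number
    return None


def kqs_met(revision: Revision) -> int:
    """Count key questions passed, stopping each analysis at its first 'no'."""
    total = 0
    for analysis in ANALYSES:
        unmet = first_unmet(revision[analysis])
        total += len(revision[analysis]) if unmet is None else unmet - 1
    return total


def conference_focus(revision: Revision) -> Dict[str, int]:
    """Map each analysis that still has an unmet key question to that KQ number."""
    focus = {}
    for analysis in ANALYSES:
        unmet = first_unmet(revision[analysis])
        if unmet is not None:
            focus[analysis] = unmet
    return focus


def decide(revisions: List[Revision]) -> str:
    """Hypothetical rule comparing the latest revision with the previous one."""
    latest = revisions[-1]
    if not conference_focus(latest):
        return "on par: no further intervention needed"
    if len(revisions) < 2:
        return "begin intervention"
    if kqs_met(latest) > kqs_met(revisions[-2]):
        return "responding: continue the intervention"
    return "not yet responding: adjust the intervention"


# Hypothetical answers for one student's first draft and a later revision.
draft = {"message": [True, False, False],
         "complexity": [False, False, False],
         "fluency": [True, False, False]}
revision = {"message": [True, True, True],
            "complexity": [True, False, False],
            "fluency": [True, True, False]}

print(conference_focus(revision))  # {'complexity': 2, 'fluency': 3}
print(decide([draft, revision]))   # responding: continue the intervention
```

In this example the message analysis is complete, so the next conference would target KQ 2 for language complexity and KQ 3 for fluency. A teacher could equally weigh the three analyses differently; the simple count used here is only one possible choice.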

Figure 6

Conclusions

In line with Field’s (2013) definition of cognitive validity, this adaptation of the binary model is designed to ensure two things. First, assessment tasks elicit processes that resemble proficient real-world use, extending literacy skills beyond the texts studied. Second, the processes of writing, editing, and refining language are assessed at a fine-grained level and graded across increasing levels of cognitive demand.

With the aim of optimizing student performance, the iterative process of revising texts with individual learners offers authentic, efficient, reliable, and valid classroom-based assessment. Triple-A analyses are one way to bring all learners closer to meeting program expectations and learning goals, and so to improve their educational and career opportunities.

References

Baker, B., & Newman, H. (2023). EBB rating scale bootcamp. Workshop presented at the 19th European Association for Language Testing and Assessment (EALTA) Conference on Sustainable Language Assessment: Improving Educational Opportunities at the Individual, Local, and Global Level (13–18 June 2023), Helsinki, Finland.

Black, P. J., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy and Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

Field, J. (2013). Cognitive validity. In A. Geranpayeh & L. Taylor (Eds.), Examining listening: Research and practice in assessing second language listening (pp. 77–151). Cambridge University Press.

Turner, C. E., & Upshur, J. A. (1996). Developing rating scales for the assessment of second language performance. Australian Review of Applied Linguistics, Series S, 13(1), 55–79. https://doi.org/10.1075/aralss.13.04tur

Miriam Semeniuk, Canada
