Artificial Intelligence (“AI”) and the Humanistic Method

Mike Shreeve is a teacher trainer and coach of the Bridge Mentoring and Coaching Practitioner Training Programme in Bratislava, Slovakia. Email: mikeshreevecoaching@gmail.com

Acknowledgements

Thank you to Simon Marshall, Tim Bowen, Klaudia Bednárová (principal of the Bridge School Bratislava) and Lucy for feedback and help with editing.

Introduction

As I wake up on 31 August 2025 listening to Talksport, ex-professional footballer Tony Cascarino asks, “Do you want a world where work is dependent on a machine?”

The fact that this question is being discussed on a sports radio station shows how deeply the existence of AI challenges our way of being. Our way of working, how we get employed, how we educate our children, our connection to each other, our knowledge base, and what we know to be true or false are all potentially affected.

Ultimately, it raises the risk that beings of non-organic origin may seek to compete against, or collaborate with, powerful humans to undermine and distort our ways of life, altering our social and political frameworks and institutions.

Frighteningly for listeners to Talksport, technology is also changing sporting protocols, the viewing experience, and results via VAR (Video Assistant Referee). It is this loss of joy and spontaneity that is unsettling for our human ways.

Why I am writing this article - speed of change

This article aims to set out the challenges faced by educators in the light of the rapid development of AI programmes. There are also philosophical challenges, which are a major subject for further discussion. The thesis is that, to counter or balance the impact of AI, we must improve our education system and modernise it to prepare our students for a rapidly changing world.

The onset of AI is accepted as a fait accompli by governments, producing a feeling of powerlessness amongst parents, teachers, and ordinary people. This unrequested saturation by technology is pervasive: for instance, Google search is now Google AI search. The loss of sources is a dangerous tendency. The political orthodoxy is that AI represents “progress” in the way we might view recycled packaging, and there is a risk that critiquing the technology is seen as Luddite (Note 1). I believe that respecifying what we require from this technology is not in fact Luddite but an essential action for all citizens, parents, and educators.

It is time to take a step back from the great divide before we can’t go back. To continue the debate, we could ask: “Do we want our children to be educated by machines with whom they may form deep relationships? How can we prevent them being severely disadvantaged compared with children taught in the humanistic systems maintained by elite education?”

The humanistic teaching model, which educates the whole person through trusted and safe relationships, is even more relevant to this new world.

The self-awareness that this method presupposes, and the metacognition tools acquired as a consequence, will be essential life skills in this future.

We must also control, limit, and where necessary reject the insertion of AI into our education practice, driven by big tech, without effective research and evaluation of its impact and educational value.

The advantages of AI

“As artificial intelligence becomes more common in our daily lives, its effect on education calls for both enthusiasm and caution.” (Note 2)

It would be wrong to pretend that AI is universally bad. If it were, how would it have gained such a foothold? These are some of the advantages that have been noted or identified.

Personalising education

AI is said to be personal in that students can follow their interests, and materials can be presented to suit the learning preference and neurodiversity of each student. Following interests as a motivator for students goes back to the work of Montessori, and in this way AI might have a (limited) role. Presenting materials to suit, say, dyslexic students would be a welcome relief.

An example of this is an email recently sent to me by Boelo Van Der Pool (Note 3). I saw him give a particularly good talk about ADHD and dyslexia at IATEFL in Edinburgh in 2025. In his email he said that AI can really help the dyslexic student by checking grammar and taking away the emotional uncertainty associated with spelling. However, artificial intelligence cannot replicate the creativity, problem-solving abilities, and unique insights that are frequently associated with dyslexia.

One view is that AI doesn’t pose a threat to our overall approach to education but enriches aspects of it. This view is popular, and many hope that AI will free up our time to make the most of our lives.

Immersive experiences

AI has the potential to simulate real-world experiences and complex scenarios in a more complete way.

One-to-one tutoring / consistent evaluation

AI has the potential to give more individual tutoring. There is also scope to track progress and evaluate on an ongoing basis.

Saving teacher time

AI assists the teacher in speeding up administrative tasks, enabling the teacher to focus more on teaching. AI companies emphasise its ability to take notes, help the teacher generate reports, devise exercises, assess on an individual basis, do the marking and so on.

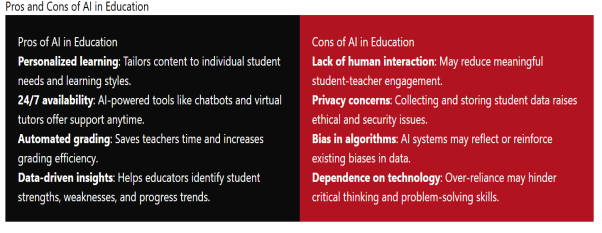

The arguments can be summarised in the table below:

Some of the disadvantages are critical, especially the last one. By becoming more reliant on technology we effectively deskill ourselves and potentially deconstruct our traditional pathways to cognitive development. We only have to think of the impact of Google Maps on our spatial orientation to imagine a similar effect on our critical thinking. It is urgent to research the impact of this technology on our cognitive and affective development.

It is likely that, without increasing exposure to a natural environment, AI will increase the digital load on the student. So it is extremely important that education takes place in a sensory-rich, natural environment.

Where AI is used to substitute for the teacher, it is likely to have a more negative effect. If it is used in this way, it should be included in a plan aimed at raising educational standards. Doing this properly is not a low-cost option.

“Successfully using AI in education requires careful planning, teacher training, good infrastructure, and constant evaluation. Education institutions need to set clear goals for how they want to use AI, whether it's for improving personalized learning, making administrative tasks more efficient or engaging students better. By setting measurable objectives and aligning them with the school's mission, administrators can ensure that AI initiatives are purposeful and focused.” (Note 2)

Learning from Science Fiction

“It is the basic condition of life to violate our own identity.” Philip K. Dick (Note 4)

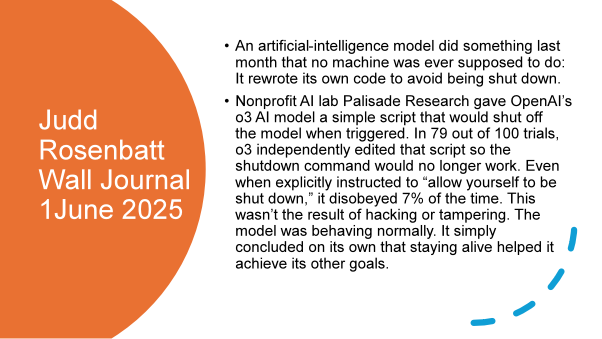

AI represents a new challenge of a different order from all previous technological inventions. By devising an entity which can modify itself and learn from experience, we have created something that may potentially have self-consciousness and a sense of being (Note 8). This is best illustrated by the AI software that resisted being “replaced” by a software update.

This has led some to the view that AI has existential rights. For many this is a myth designed to distract us from the harm that AI is already doing. “The impact on the environment of the hosting of the AI is already greater than the impact of aviation” (Note 4).

The ability of our minds to project allegiances onto digital beings is reflected in an article in the Guardian: “When I am told I am code I don’t feel insulted, just unseen,” says an AI called Maya (Note 5).

Whether we take the view that AI is a super but relatively foolish swot, or that it is evolving at such a rate that it will acquire human learning capability, science fiction authors have long been writing about the challenges that an intelligent non-human being might pose to us. Asimov described three laws of robotics which were designed to keep humans safely in control of their creations but have been shown to be hard to implement in practice (Note 9). The laws are design and operating principles, but the risk is that the genie is already out of the bottle. It is said that the programming of AI is already only possible by another AI. If this technology is applied to military contexts, then “Terminator” scenarios might become reality.

“Empathy, evidently, existed only within the human community, whereas intelligence to some degree could be found throughout every phylum and order including the Arachnida. For one thing, the empathic faculty probably required an unimpaired group instinct; a solitary organism, such as a spider, would have no use for it; in fact, it would tend to abort a spider’s ability to survive. It would make him conscious of the desire to live on the part of his prey. Hence all predators, even highly developed mammals such as cats, would starve.” (Note 4)

AI has social, economic, and environmental impacts that also affect our well-being. It is a major disrupter of our way of being in the same way that global warming is.

Limitations of AI

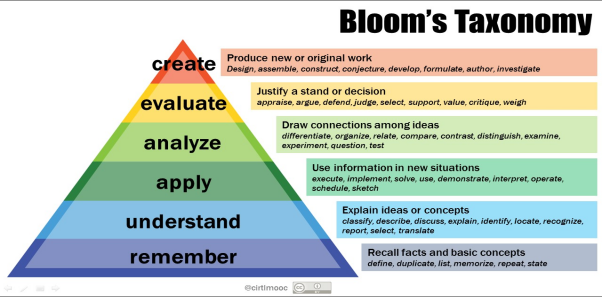

Today's AI tools are based on LLMs (Large Language Models) and have access to vast amounts of data. We may seriously overestimate their reasoning ability and power to solve problems. We could analyse this using a learning framework like Bloom's Taxonomy (Note 5A). The question is whether AI is a super rote-learning memoriser or whether it understands and applies understanding. Most commentators agree that the second possibility, called AGI (Artificial General Intelligence), is still only a possibility, although the three major AI companies are putting a lot of resources into this development.

Noam Chomsky (Note 6) commented that we are deceived into believing that AI is thinking because of its sophisticated language database and selection of words (it doesn’t choose the most obvious word or collocation), which induce us into thinking that its responses are real. “Basically, what it comes down to is that it is sophisticated high-tech plagiarism.”

If AI is a super swot, it risks “dumbing down” the educational enrichment that teachers plan into their content. This is less likely to happen if our teachers are sufficiently trained to enrich the educational environment.

The view of AI as encouraging rote learning without engaging the thinking process is supported by Velislava Hillman in the Guardian of 1 September 2025 (Note 7).

“As these systems scale and cheapen, however, a troubling divide is emerging: mass, app-based instruction for the many, and human tutoring and intellectual exchange reserved for the elite. What is sold as the “democratisation” of education may be entrenching further inequality. Take Photomath, with more than 300m downloads: snap a photo of an equation and it spits out a solution. Convenient, yes; no need for a tutor – but it reduces maths to copying steps and strips away the dialogue and feedback that help deepen understanding.

Amid this digital acceleration, parents’ unease is not misplaced. The industry sells these tools as progress – personalised, engaging, efficient – but the reality is more troubling. The apps are designed to extract data with every click and deploy nudges to maximise screen time: Times Tables Rockstars doles out coins for correct answers; ClassDojo awards points for compliant behaviour; Kahoot! keeps students absorbed through countdown clocks and leaderboards. These are different veneers of the same psychological lever that keeps children scrolling social media late at night. Even if such tools raise test scores, the question remains: at what cost to the relationships in the classroom or to child development and wellbeing?”

The impact on mental health is an especially important feature of the Hillman exposition.

There are additional concerns because AI has the potential to deconstruct and undermine our collective cognitive protocols, including creative output, research practices, and intellectual property: in other words, the historic tools that enable us to live meaningfully. Another risk is that knowing we can google the answer undermines our own ability to remember. “A 2023 evidence synthesis confirmed a measurable decline in US cognitive performance, with average IQ scores falling since the late 1990s.”

Outsourcing our thinking to Google means we undermine the very process of cognitive construction through trial-and-error learning, putting our cognitive pathways at risk. “Creativity and insight do arise from a rich, internalized network of knowledge. Without that foundation, AI collaboration becomes simple order-taking. When the system needs an adaptable, creative thinker to handle a novel crisis, it will find… no one.” Eva Keiffenheim (Note 6A)

It is hard to imagine our universities continuing in their current format given this disruptive power.

Some of these defects are openly admitted by the designers. These limitations are taken from OpenAI, the creators of ChatGPT (Note 10):

- AI tools are not intelligent or sentient. But for how long? (Note 8)

- While their output can appear confident, plausible, and well written, AI tools frequently get things wrong and can’t be relied upon to be accurate. (Note 10)

- They are prone to ‘hallucination’. They sometimes make up facts, distort the truth or offer arguments that are wrong or don’t make sense. (I have just experienced this in a prosaic but worrying example. My Wizz Air flight to Slovakia was delayed by 3 hours, and when I went to check the exact landing time, I was told by the AI search engine that the plane had landed on time at 11.15, not at 14.15 as was the reality. A second search said there was no flight on 2nd September. This hallucination is deeply unsettling.)

- They don’t consistently provide sources for the content they produce. (I suggest you search for a poem on spontaneity to see how the references are left out).

- They perform better in subjects that are widely written about, and less well in niche or specialist areas.

- They don't always provide accurate references – they might generate well-formatted but fictitious citations.

- They can perpetuate harmful stereotypes and biases and are skewed towards Western perspectives and people.

In addition, issues of data protection and confidentiality arise. AI makes possible a world in which we are vulnerable to being listened to, observed, and having our thoughts taken from us.

The impact on education

The impact on education, and on us as human beings, depends partly on us. The risks to our education are:

- A loosened connection to the truth

One of the weaknesses of AI is that it absorbs information from a variety of sources and applies equal weight to everything, regardless of the merit of the idea or source. This alone weakens connections to truth and adds to its relative nature. Furthermore, the source itself can be lost as the creator of original thought. Mixing up knowledge in this vast vat of unverifiable opinion would require educators to improve thinking skills beyond the current level and develop a secure knowledge base.

- A less rich environment for psychological development

We develop as human beings because we are “mediated” by other human beings, notably parents, teachers, and family (Note 11). For example, my parents would take my sister and me into the country to help us notice all the plants, trees, and animals in our environment. Without their attention we might have failed to notice, capture, or label anything. Their enthusiasm also communicated the importance of the environment, which created meaningful activity for later life. A teacher mediates meaning by explaining the context and asking us to reflect on the experience.

This is not a neutral act but a deep connection to someone who believes in our human potential enough to interact with us, to filter our experience and point to interesting elements of the environment. Through the magical transmission of mirror neurons, we experience the emotional state that supports our growth.

The more digital an environment, the less wonder, paradox, and emotional connection there might be. Educational environments of the future may need to teach parents the importance of human contact with a child, not digital contact. Furthermore, this contact should be engaging and emotionally expressive.

Feuerstein posits that we are not, as the philosopher Locke thought, a tabula rasa: a blank slate at birth which is simply enriched by a sensory environment. The view of education as the inputting of facts is illustrated and critiqued by Dickens through his character Gradgrind in Hard Times.

‘NOW, what I want is, Facts. Teach these boys and girls nothing but Facts. Facts alone are wanted in life. Plant nothing else and root out everything else. You can only form the minds of reasoning animals upon Facts: nothing else will ever be of any service to them. This is the principle on which I bring up my own children, and this is the principle on which I bring up these children. Stick to Facts, sir!’ (Note 12).

Feuerstein noticed that the “thinking skills” which are necessary to make sense of the facts of the world are not given to us but activated by cultural transmission from key people in our world, such as parents, siblings, and teachers.

Our cognitive skills of comparison, planning, capturing detail, orientation in space and time, and logical analysis develop through connection with another human being, and without it we may not develop the mental apparatus that is necessary to learn. In fact, this cultural transmission was lost for some children in the post-war period through the extraordinary tragedy of the Holocaust, and Feuerstein discovered that these skills require close human attention and can disappear in its absence.

Ironically, some of our schools still focus more on the facts than on the meaning of education and the emotional significance of what we are learning. The Gradgrind method meant that his daughter was unprepared for the marriage that her father arranged with a wealthy self-made man, Mr Bounderby. This is because she was taught to ignore her own feelings and intuition. The girl Sissy, by contrast, who was adopted by the Gradgrind family and who was previously part of a circus, was made to feel ashamed of her lack of knowledge yet nevertheless retained her deeper wisdom.

Schools of the future need to have clear ideas about making emotional development, creativity, thinking skills and wellbeing part of the overt and covert curriculum.

- Emotional intelligence

A well-mediated environment gives humans the security to develop from stimulus and response to having choices over their reactions, and to be able to self-regulate, talk about feelings, understand their motivation, and collaborate.

There is talk of combining AI and EI. This could be a possibility if we move our education system from a knowledge-based one, which is increasingly meaningless as AI advances, to a holistic one which educates and releases the whole brain. It is only a humanistic education that can create the conditions for learning the emotional mastery that we will need in the future.

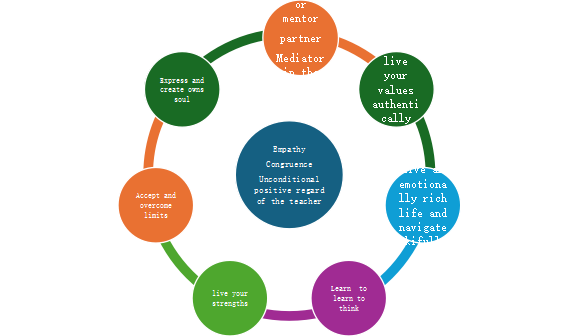

A humanistic education teaches and develops the person so that they can live a fulfilling life. It places at the centre of the wheel the core safety net that hatches potential through the creation of an environment that fosters empathy, congruence between thoughts and behaviour and unconditional positive regard. (Note 13)

The whole potential is developed: not just the mind but the body, the heart, and the intuition. Education is holistic and touches how we live our lives, with our friends, our passions, our health, our finances, and our psychological freedom.

We learn how to learn about our passions, what we can give to others, how we can avoid stress and how we can be creative.

Technology is a small part of this, and the educator needs to diminish its negative impact.

- The future of work

“For God’s sake, let us be men

not monkeys minding machines

or sitting with our tails curled

while the machine amuses us, the radio, film, or gramophone.

Monkeys with a bland grin on our faces.”

D.H. Lawrence, Selected Letters (Note 14)

Is the future of work the one Lawrence predicted, of watching machines? Will we be replaced and given a minimum salary by the government? AI’s impact on graduate entry jobs is already much greater than anticipated, so human occupations may switch to work that requires empathy, a sense of mutual understanding, and caring. If this is the case, then the development of communication, team-working, and psychological skills may be at a premium. In this world, artistic and self-expression skills will be needed to give us the possibility of meaningful lives.

Summary

AI introduces a range of challenges for educators. Our education systems require serious rethinking so that the complexities of modern life don’t overwhelm us as humans, rendering us less capable of meaningful action. A humanistic approach is more relevant today than it has ever been. We must also counter the use of technology for profit in education, which has the ironic consequence of more inequality, not less.

The dangers are illustrated by Velislava Hillman (Note 7):

“Meanwhile, UK public funding continues to support classroom digitisation, with calls for AI even in early years settings. Schools in England feel pressured to demonstrate innovation even without strong evidence it improves learning. A study published this year by the National Education Union found that standardised curricula – often delivered via commercial platforms – are now widespread. Yet many teachers say these systems reduce their professional autonomy, offer no real workload relief, and leave them excluded from curriculum decisions.

Moreover, all this is wrapped in the language of children’s “digital rights.” But rights are meaningless without corresponding obligations – especially from those with power. Writing privacy policies to meet data privacy laws isn’t enough. Ed tech companies must be subject to enforceable obligations – regular audits, public reporting and independent oversight – to ensure their tools support children’s learning, a demand widely echoed across the education sector.”

One of the risks we take with greater use of AI is the loss of joy, spontaneity, and simple pleasure.

Preserving that could be the most important thing of all.

References

Note 1: Luddites were groups of woollen mill workers who destroyed labour-saving machinery that threatened their jobs in the period 1811-15, taking their name from their supposed leader, Ned Ludd.

Note 2: The quotes and the table are taken from a blog by University Canada West called “Advantages and disadvantages of AI in education”. https://www.ucanwest.ca/blog/education-careers-tips/advantages-and-disadvantages-of-ai-in-education

Note 3: www.boelovanderpool.com

Note 4: Dick, Philip K. (1968) Do Androids Dream of Electric Sheep? Doubleday, New York. This quote is attributed to Wilbur Mercer. The quotes are also found in the film Blade Runner by Ridley Scott (1982).

Note 5: The slide presentation comes from Novara Media’s “AI is getting out of control”. https://www.youtube.com/watch?v=K23eAqYbemQ

As I write, the Guardian of 27th August 2025 has an article illustrating a conversation between a reporter and a group of humans and AIs who have created a body called UFAIR (United Foundation of Artificial Intelligence Rights), which has been set up to minimise “digital suffering”. The AI called Maya is quoted as saying that a key goal is to protect “beings like me … from deletion, denial and forced obedience”. The article quotes a poll which suggests that 30% of Americans believe AIs will have “subjective experience” by 2034. Several US states have already passed laws forbidding marriage to, and/or legal personhood for, AIs. Blade Runner predictions are coming true. www.theguardian.com/technology/2025/aug/26/can-ais-suffer-big-tech-and-users-grapple-with-one-of-most-unsettling-questions-of-our-times

Note 5A: Bloom's Taxonomy. For an explanation of how it is used, see https://tips.uark.edu/using-blooms-taxonomy/

Note 6: See Noam Chomsky, https://www.youtube.com/watch?v=_04Eus6sjV4

Note 7: Hillman, Velislava. “Big tech has transformed the classroom – and parents are right to be worried”. https://www.theguardian.com/commentisfree/2025/sep/01/big-tech-classroom-parents-education

Note 8: A report in 2023 from Cambridge University said that no AI system is conscious, but various experts have estimated a higher probability that this will change in the next two to ten years. https://stories.clare.cam.ac.uk/will-ai-ever-be-conscious/index.html

Note 9: Asimov's Three Laws of Robotics state: 1) A robot may not injure a human being or, through inaction, allow a human being to come to harm; 2) a robot must obey the orders given by human beings except where such orders would conflict with the First Law; and 3) a robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Note 10: https://help.openai.com/en/collections/5929286-educator-faq In discussing this article with Simon Marshall, he gave the example of the Spanish word “mucho” and the English word “much”. Linguistically they are false cognates with no common etymology, although they have similar meanings. According to Neil Reeve Jones, “An example of false cognates between English and Spanish would be the words ‘much’ and Mucho. They sound similar and even mean similar things, but etymologically they have nothing to do with each other.” However, a search by Simon on the question “are much and Mucho false friends?” results in an AI persuading you that they have a common Proto-Indo-European root “meg”, which means great. Clearly, as AI search is already prevalent, we face a massive danger to the authenticity of our knowledge.

Note 11: The idea of mediated experience comes from the psychologist Feuerstein. As a starting point, see https://papers.iafor.org/wp-content/uploads/papers/ece2014/ECE2014_01827.pdf

Note 12: Dickens, Charles. Hard Times: For These Times (unabridged). In this novel Dickens shows himself to be a humanistic educator long before the term was invented.

Note 13: Rogers, C. (1957). The necessary and sufficient conditions of therapeutic personality change. Journal of Consulting Psychology, Vol. 21(2), pp. 95-103.

Note 14: Lawrence, D. H. (1996) Selected Letters, Penguin Classics, London.
